
Tech Brief: What is Line Regulation?

Every pressure transducer that supplies a high-level DC output signal, such as 0 to ±5 Vdc or 4-20 mA, should have its line regulation specified.

Line regulation is the ability of a transducer to keep its output signal error small when the supply voltage it receives changes. This matters when a transducer is calibrated at one supply voltage in the calibration lab but is put into service on a different power source. How much error will the difference in supply voltages cause? The line regulation specification lets you calculate it.

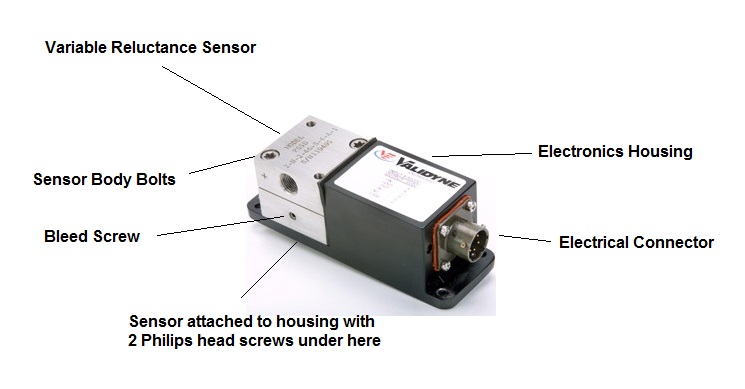

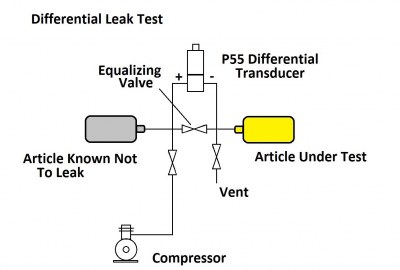

The P55, for example, specifies a line regulation of 0.02% FS. This means that any change in operating voltage within its +9 to +55 Vdc limits will cause an output error of no more than 0.02% of full scale.

Suppose a P55 is calibrated for a 0 to +5 Vdc output over a 0 to 1 psid range, and the calibration lab powers it from +12 Vdc. If that same P55 is then installed in a system with a +24 Vdc supply, the maximum output error would be just 0.02% of the transducer's full scale.

In this example, the output shift due to line regulation would be at most 0.0002 psi (1 mV on the 5 V output), a negligible error.
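The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a manufacturer tool: the function name `max_line_regulation_shift` is hypothetical, and it assumes the specification is a single worst-case percentage of full scale that applies only when both supply voltages lie inside the rated range (+9 to +55 Vdc for the P55).

```python
def max_line_regulation_shift(full_scale: float, line_reg_pct_fs: float,
                              cal_supply_v: float, field_supply_v: float,
                              supply_min_v: float, supply_max_v: float) -> float:
    """Hypothetical helper: worst-case output shift (in the units of
    full_scale) caused by moving from the calibration supply voltage to
    the field supply voltage."""
    # The line regulation spec only covers supplies inside the rated range.
    for v in (cal_supply_v, field_supply_v):
        if not supply_min_v <= v <= supply_max_v:
            raise ValueError(f"{v} Vdc is outside the rated supply range; "
                             "the line regulation spec does not apply")
    # A %FS spec is a fraction of full scale, independent of the size of
    # the voltage change, as long as both voltages are within limits.
    return full_scale * line_reg_pct_fs / 100.0


# P55 example from the text: 0.02% FS, 0 to 1 psid range mapped to
# 0 to +5 Vdc, calibrated at +12 Vdc, installed on +24 Vdc.
psi_shift = max_line_regulation_shift(1.0, 0.02, 12.0, 24.0, 9.0, 55.0)
volt_shift = max_line_regulation_shift(5.0, 0.02, 12.0, 24.0, 9.0, 55.0)

print(f"{psi_shift:.4f} psi")         # 0.0002 psi
print(f"{volt_shift * 1000:.1f} mV")  # 1.0 mV
```

Expressing the result in both pressure units and output volts makes it easy to compare against the resolution of whatever readout the transducer feeds.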

Line regulation in modern semiconductor circuitry has improved to the point that output error due to power supply changes is essentially zero. One use of this specification, however, is as a troubleshooting aid: a regulated transducer whose output changes greatly with supply voltage probably has a fault in its electronics and should be repaired.
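As a sketch of that troubleshooting check, the hypothetical function below compares two output readings taken at the same applied pressure (for example, zero differential pressure) but at two different supply voltages, and flags the unit if the shift exceeds the line regulation specification. The readings in the usage lines are made-up illustrative numbers, not measured data, and a real check would also need to allow for meter resolution and any drift in the applied pressure.

```python
def line_regulation_suspect(out_at_v1: float, out_at_v2: float,
                            full_scale_output: float,
                            line_reg_pct_fs: float) -> bool:
    """Hypothetical check: True if the output shift between two supply
    voltages (same applied pressure) exceeds the line regulation spec."""
    allowed = full_scale_output * line_reg_pct_fs / 100.0
    return abs(out_at_v2 - out_at_v1) > allowed


# Illustrative readings only (Vdc output on a 0 to +5 Vdc, 0.02% FS unit):
healthy = line_regulation_suspect(2.5000, 2.5004, 5.0, 0.02)  # 0.4 mV shift
faulty = line_regulation_suspect(2.5000, 2.5600, 5.0, 0.02)   # 60 mV shift
print(healthy, faulty)  # False True
```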
